The future of business intelligence is shifting fast. Tableau developers with crossover skills in data engineering, AI, and cloud architecture will become indispensable assets, guiding data strategy for leading organizations racing to unlock growth.

Are you ready to expand your toolkit beyond traditional SQL and dashboard design?

Read on for an insider’s overview of paradigm-redefining advancements arriving over the next year that will separate progressive developers from the pack.

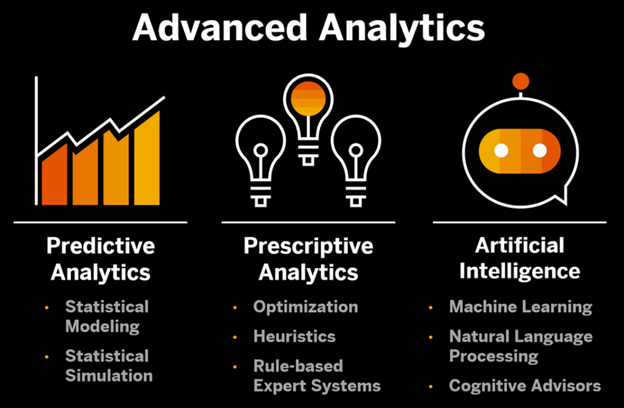

Traditionally, bespoke predictive models required specialized data scientists working in programming languages like Python or R.

Lengthy development cycles limited how quickly the business could iterate on enhancements. Tableau 2024 bridges this gap by embedding intuitive drag-and-drop tools that democratize AI even for non-coders.

For instance, the Einstein Discovery extension lets developers integrate predictive capabilities using simple workflow steps.

Clean and shape data, input goals, select auto-generated models, then explain or tune outputs, with no ML expertise needed.

Users can even create custom Einstein predictions using natural language and Excel-like pointing and clicking.

Other low-code options like Azure Synapse pipelines speed up complex data preparation tasks with guided augmentation.

As barriers to leveraging data science models fall, developers play a key role in demystifying AI adoption for business teams. That includes prioritizing transparency and auditability as safeguards against risks like bias.

Curating governed datasets for broad internal use accelerates enterprise-wide augmentation. Exciting times ahead as developers catalyze innovation diffusion!

Unleashing Live Data Streams at Scale

Static databases are table stakes. Future-focused developers architect systems that harness real-time data flows to unlock new business value.

Tableau helps navigate data’s dynamic future through flow-native offerings like Tableau Data Streams and Tableau Hyper In-Memory Analytics.

Tableau Data Streams allow blending fast-updating data like IoT sensor metrics or customer clickstreams with existing data warehouses without migration.
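To make the blending idea concrete, here is a minimal sketch in plain Python. It is not Tableau's API: the `CUSTOMERS` lookup, the event fields, and the function names are all hypothetical, chosen only to show fast-arriving events being enriched with warehouse attributes without moving the warehouse data.

```python
from collections import defaultdict
from typing import Iterable, Iterator

# Hypothetical warehouse dimension table, keyed by customer_id.
CUSTOMERS = {
    "c1": {"segment": "enterprise"},
    "c2": {"segment": "smb"},
}


def enrich(events: Iterable[dict], customers: dict) -> Iterator[dict]:
    """Attach a warehouse attribute to each streaming event as it arrives."""
    for event in events:
        profile = customers.get(event["customer_id"], {})
        # Events with no matching warehouse row are kept and labeled "unknown".
        yield {**event, "segment": profile.get("segment", "unknown")}


def clicks_by_segment(events: Iterable[dict], customers: dict) -> dict:
    """Aggregate clicks per segment over the enriched stream."""
    counts = defaultdict(int)
    for row in enrich(events, customers):
        counts[row["segment"]] += row["clicks"]
    return dict(counts)


# A stand-in for a clickstream; in practice this would be an unbounded iterator.
stream = [
    {"customer_id": "c1", "clicks": 3},
    {"customer_id": "c2", "clicks": 1},
    {"customer_id": "c1", "clicks": 2},
    {"customer_id": "c9", "clicks": 4},
]

totals = clicks_by_segment(stream, CUSTOMERS)
print(totals)
```

Because `enrich` is a generator, each event is joined as it arrives rather than after the whole stream has been collected, which is the property that matters when the source never stops producing rows.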

Custom data flows integrate within minutes using point-and-click configuration, plus built-in stream partitioning and scheduling to keep them manageable at scale.

Toggle views to assess stream health. Hope you’re ready to handle billions of rows!

For faster analysis across even the highest volume data sets with the lowest latency, Hyper’s sub-second query speeds empower rapid-fire iteration.

Vectorized computing executes complex analytical queries with stunning performance regardless of data size, structure, or format. Hyper handles hundreds of billions of rows on a single node.

Cloud-Native Architecture for Maximum Flexibility

On-prem servers alone won’t deliver experiences that match the pace of business innovation.

Developers now architect systems leveraging the public cloud for essential attributes like elasticity, global availability, and managed infrastructure key to analytics success.

Good news—Tableau’s multi-cloud ecosystem makes a smooth transition to the cloud easy while avoiding vendor lock-in headaches.

Deploy exactly where needed—on Tableau Server via AWS, Azure, or Google Cloud Platform.

Containerization via Kubernetes maximizes portability to relocate workloads anytime. Plus cloud resource optimization ensures cost-efficiency even at scale.

Moving fully to the cloud unlocks new flexibility to instantly spin up production-grade analytics environments and dismantle them when done.

Tableau Blueprints configures full tech stacks end-to-end via Infrastructure-as-Code, integrating data and analytics tiers automatically.

This facilitates seamlessly embedding analytics directly into other systems—a key step towards pervasive, decentralized intelligence.

Analytics Automation to Augment Innovation

Dynamic data environments demand developers improve efficiency in coding analytical logic to free up strategic bandwidth.

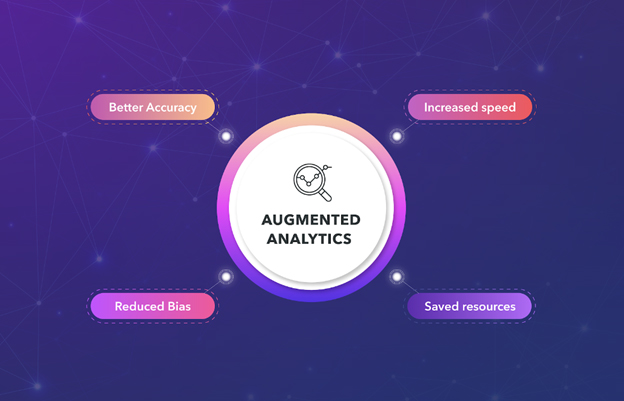

Tableau answers with augmented analytics features applying AI to help automate rote tasks so we can focus innovation on the hardest business problems.

New capabilities like Assisted Insights and Explain Data reduce heavy lifting during analytics workflows.

For example, Tableau automatically explores data and suggests aggregations, categorizations, statistical tests, chart types, and other steps toward a goal. Each suggestion is a one-click action based on best practices, and we can customize it if we choose.

Explain Data speeds insights by scanning data to detect drivers behind outcomes, visually mapping factors by degree of impact.
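The idea of ranking factors by degree of impact can be illustrated with a small Python sketch. This is not the method Explain Data uses, which is not described here; it swaps in a plain Pearson correlation as a stand-in for "impact", and the factor names and figures are made up for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        return 0.0  # a constant column cannot explain any variation
    return cov / (spread_x * spread_y)


def rank_drivers(factors, outcome):
    """Return (factor, correlation) pairs, strongest absolute correlation first."""
    scores = {name: pearson(values, outcome) for name, values in factors.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical candidate factors and the outcome they might explain.
factors = {
    "discount_pct": [0, 5, 10, 15, 20],
    "support_tickets": [4, 1, 3, 2, 5],
    "region_code": [1, 1, 1, 1, 1],
}
revenue = [100, 110, 120, 130, 140]

ranking = rank_drivers(factors, revenue)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

Correlation only flags factors that move together with the outcome. It does not establish cause, which is one reason a human review step stays in the loop.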

Tableau’s Einstein Discovery takes auto-piloting further by generating fully custom predictive models tailored to our needs in minutes with limited coding.

Just input data, define objectives, then review the auto-created explainable AI models and select the best performer. Or leverage Smart Narratives to automatically generate presentation-ready reports explaining key influences driving KPIs.

Behind the scenes, capabilities leverage external processing power in the cloud so onboard automation never strains device resources.

As developers, we shape the direction of auto-generation while benefiting from extra bandwidth to build programs for bleeding edge use cases manually.

Together, human creativity and machine productivity cultivate fertile ground for our organizations’ next analytics breakthrough!

Building Trustworthy AI Ethics into Code

Implementing analytics to aid critical decisions comes with ethical burdens developers help organizations navigate responsibly.

Tableau’s transparency features, embedded throughout its augmented offerings, establish trustworthy AI safeguards upfront and mitigate downstream risks from underlying models.

For instance, Tableau Catalog maintains full documentation explaining the lineage of enhanced datasets from origin through augmentation, bringing transparency into the analytics development lifecycle.

Audit trails in Ask Data track interactions with its natural language AI, explaining each translation rationale visually for end-user confirmation. Explain Data shows drivers of model predictions to facilitate choice.

As frontline architects, developers play a crucial role in upholding ethics through governance policies that turn values into technical controls.

Our code defines allowed data usage, ensures fairness by identifying biases, implements access protocols securing privacy, and enables clear explanations empowering human oversight over automation recommendations.
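As one example of what "identifying biases" can look like in code, the sketch below compares approval rates across groups and flags the model for review when the gap is too wide. It is a minimal illustration of a demographic parity check, not a Tableau feature; the records, field names, and the `REVIEW_THRESHOLD` value are hypothetical and would need to be set by your own governance policy.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Share of positive outcomes per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[outcome_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs for two groups of applicants.
predictions = [
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": False},
    {"group": "A", "approved": True},
    {"group": "B", "approved": True},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
]

REVIEW_THRESHOLD = 0.2  # hypothetical policy value, not an industry standard

rates = selection_rates(predictions, "group", "approved")
gap = parity_gap(rates)
needs_review = gap > REVIEW_THRESHOLD
print(rates, gap, needs_review)
```

A gap above the threshold does not prove the model is unfair. It is a signal that a person should look at the data and the model before its recommendations are acted on.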

Building such intelligence responsibly and inclusively remains imperative.

Pioneering an Analytics-Infused Cultural Revolution

The data paradigm shift emerges from more than just leading-edge tools—underlying mindsets require evolution too.

Developers cultivate analytics culture by empowering users, not through restrictive centralized gatekeeping but via democratization, guidance, and governance boosting competency. Our strategies set the tone for data’s expansive possibilities.

Tableau pioneers such cultural change by example. Its trusted community of over 1 million data enthusiasts openly shares learnings, celebrating members who pay it forward.

Hyperships and Makeathon hackathons incubate ideas for solving real problems, while ambassadors and meetups encourage skill-building grounded in real-world use cases.

As analytics permeates the enterprise, perspectives grow more cross-functional rather than top-down.

As analytics capabilities accelerate, developers help users align skill-building with emerging toolkits.

For instance, the Einstein Discovery workspace allows hands-on exploration of AI models to guide learning.

Ask Data trains its NLP with every query while explaining the logic behind suggestions.

Barriers to entry lower as technology automates rote work, allowing more employees to participate actively in advancing analytics frontiers.

A developer’s journey to the cloud signals readiness to usher your organization into the future. Businesses will demand this agility. Are your skills keeping pace?

The pace of analytics innovation is accelerating thanks to AI, big data, and cloud technologies reinventing what’s possible.

Advancing Analytical Literacy via AI Training

Expanding analytics at scale hinges equally on technology capabilities and human competencies leveraging them.

Tableau pioneers uplifting analytical literacy for all users via Ask Data, its natural language-powered data assistant.

Ask Data accepts queries in conversational language, understands intent using AI behind the scenes, and then auto-generates the appropriate data visualization responding to the question.
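The question-to-chart flow can be pictured with a toy Python sketch. It is deliberately simplistic and is not how Ask Data works internally: it uses keyword matching rather than a trained model, and the field catalog, aggregation words, and chart rules are all hypothetical.

```python
import re

# Hypothetical field catalog for a single data source.
MEASURES = {"sales", "profit"}
DIMENSIONS = {"region", "category", "month"}
AGGREGATIONS = {"total": "sum", "sum": "sum", "average": "avg", "avg": "avg"}


def parse_question(question: str) -> dict:
    """Map a plain-language question to a simple chart specification."""
    tokens = re.findall(r"[a-z]+", question.lower())
    measure = next((t for t in tokens if t in MEASURES), None)
    dimension = next((t for t in tokens if t in DIMENSIONS), None)
    aggregation = next((AGGREGATIONS[t] for t in tokens if t in AGGREGATIONS), "sum")
    if measure is None:
        raise ValueError("no known measure found in the question")
    # Simple chart rules: a single number, a trend over time, or a comparison.
    if dimension is None:
        chart = "text"
    elif dimension == "month":
        chart = "line"
    else:
        chart = "bar"
    return {
        "measure": measure,
        "aggregation": aggregation,
        "dimension": dimension,
        "chart": chart,
    }


spec = parse_question("What is the average profit by region?")
print(spec)
```

A real assistant has to handle synonyms, ambiguity, and filters, which is where the machine learning comes in. The sketch only shows the shape of the output: a structured request that a visualization layer can render.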

Every interaction with Ask Data continues to train the machine learning model, even when users have no data skills.

Over time the platform aligns with the vocabulary and style of cross-functional teams by business function, seniority, or geography, improving interpretability for precise responses. Despite the no-code simplicity, the capabilities under the hood are complex.

Developers will discover exciting possibilities for embedding analytical training features directly into custom applications.

Ask Data also offers a web authoring SDK and REST API allowing other apps to leverage its NLP via simple integration.

End users query data naturally while gaining exposure interacting with AI, increasing their analytics comfort levels – a high-leverage combination for unlocking human potential!

The future of analytics goes far beyond just enabling self-service charts and dashboards.

Tableau is pioneering the vision of an end-to-end platform facilitating skill building while making AI accessible.

Developers will play a crucial role in nurturing this analytical awakening within our organizations!

New capabilities democratize data science, unleash real-time insights, and provide unmatched flexibility—but only if we as developers embrace continuous learning to elevate our skills.

Make it your personal and professional goal to explore one new Tableau platform capability monthly.

Together, let’s architect analytics systems that empower our organization’s highest ambitions. What emerging skill are you excited to build next?